This week, we learned how to use web crawlers. A scraper is a tool that helps us collect specific data from a website, such as the details of videos on a video platform (including page size and duration). With a web crawler, we can gather and combine data more quickly and conveniently, which is very useful for analysis. For example, if we want to know which movies are in the top 250 of this year's Douban movies, we can use a crawler to extract the name of each movie.

Here's the process we followed:

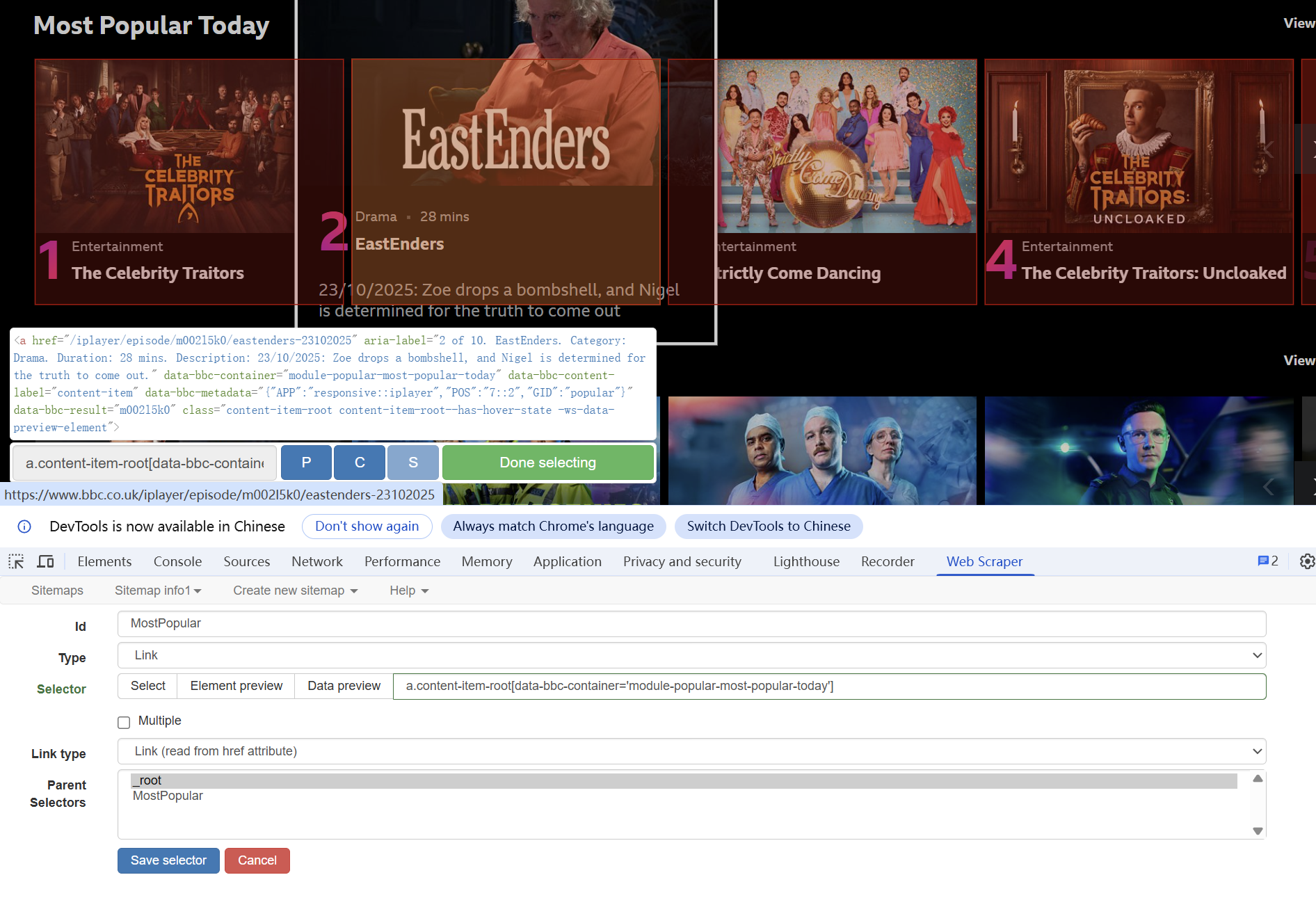

- First, open the scraper tool

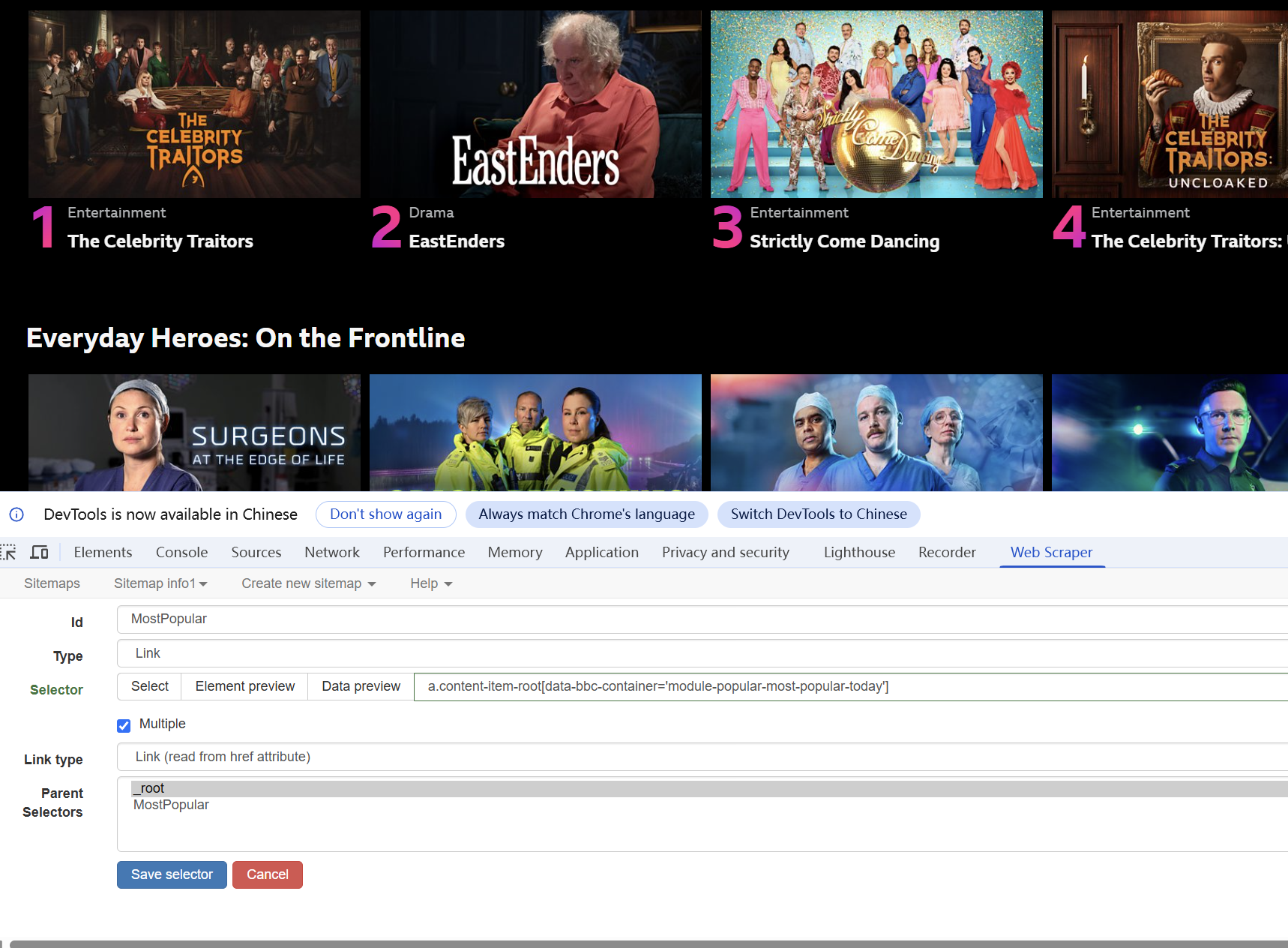

- Create a new sitemap and a new selector, and set the selector to the type of data you want to collect

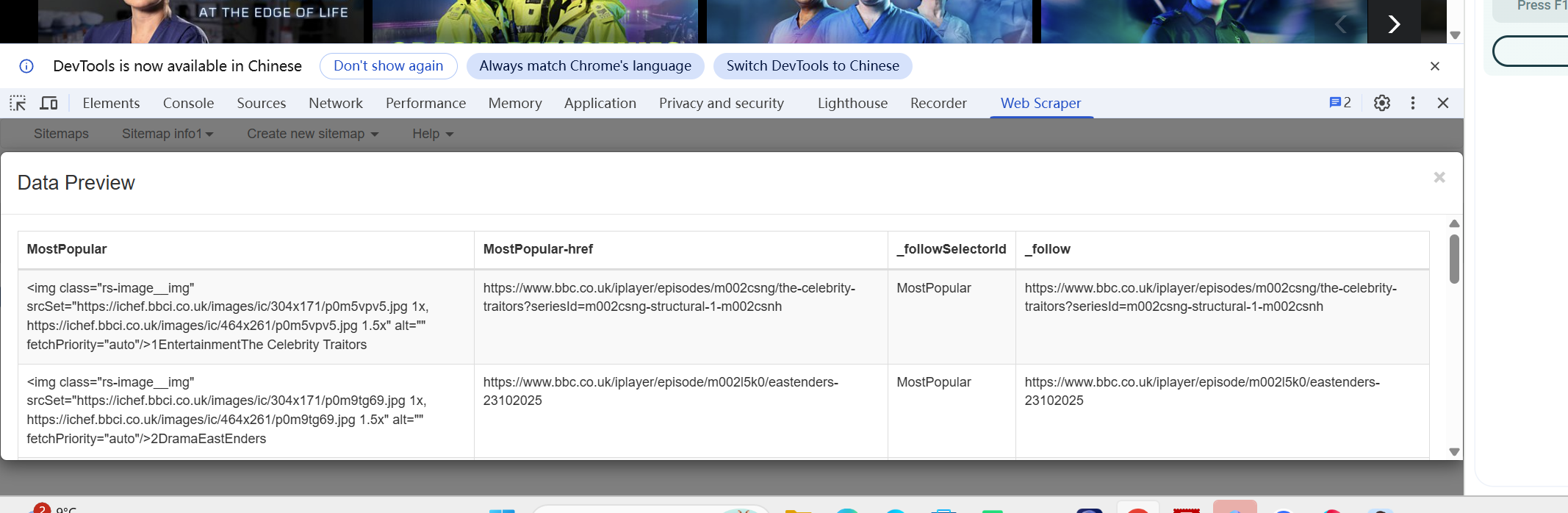

- Save the selector and open the data preview to check the results
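
The scraper tool does this through its interface, but the idea behind a selector can also be sketched in code. The example below is only an illustration, not what the tool runs internally: the HTML snippet, the `title` class name, and the names `TitleExtractor` and `extract_titles` are all made up for this sketch, and a real page will have a different structure.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real site will use different tags and classes.
SAMPLE_HTML = """
<ol>
  <li><span class="title">Movie A</span><span class="rating">9.1</span></li>
  <li><span class="title">Movie B</span><span class="rating">8.8</span></li>
</ol>
"""


class TitleExtractor(HTMLParser):
    """Collects the text inside every <span class="title"> element."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "span" and ("class", "title") in attrs:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_title = False

    def handle_data(self, data):
        # Keep only non-empty text found while inside a title span.
        if self._in_title and data.strip():
            self.titles.append(data.strip())


def extract_titles(html):
    """Return the list of movie titles found in the given HTML string."""
    parser = TitleExtractor()
    parser.feed(html)
    return parser.titles


print(extract_titles(SAMPLE_HTML))
```

Here the "selector" is the rule `span` with class `title`, and the printed list plays the role of the data preview in the last step.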

However, I believe using web crawlers raises ethical challenges. First, some data is proprietary to the company that holds it, and that ownership should be respected. Second, unrestricted crawling can consume significant server bandwidth and computing resources, which may slow down or block access for normal users.
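
One common way to reduce both problems is to follow a site's `robots.txt` rules and to pause between requests. The sketch below shows the idea with Python's standard `urllib.robotparser`. The `robots.txt` content, the `example.com` URLs, the `example-bot` user agent, and the helper names `build_policy` and `plan_requests` are assumptions made for illustration. It makes no network requests and only decides which URLs a crawler should fetch and how long to wait between them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def plan_requests(parser, urls, user_agent="example-bot", default_delay=1.0):
    """Return the URLs the crawler may fetch and the delay (seconds) between requests."""
    # Fall back to a default pause if the site does not state a crawl delay.
    delay = parser.crawl_delay(user_agent) or default_delay
    allowed = [url for url in urls if parser.can_fetch(user_agent, url)]
    return allowed, float(delay)


policy = build_policy(ROBOTS_TXT)
allowed, delay = plan_requests(
    policy,
    ["https://example.com/movies", "https://example.com/private/data"],
)
print(allowed, delay)
```

A crawler built on this would call `time.sleep(delay)` between requests and skip any URL that is not in `allowed`. This respects the site owner's stated limits, although it does not by itself settle whether the data may be reused.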